ACCESSTECH: Experiencing Access with Interactive Technologies

ACCESSTECH investigates, through Participatory Research through Design, the deeper theories behind access as a component affecting disabled people's interaction with technologies. We approach experiences of access along four paths of inquiry: 1) We identify the research and design parameters needed to produce knowledges about access-enabling technologies. 2) We establish which methods are required to design and develop critical technologies that are rooted in disability cultures as well as accepted and desired by disabled people. 3) We explore a range of technologies to understand how they afford different kinds of access experiences. 4) We conceptualise and articulate, on a theoretical level, access experiences as a distinct aspect shaping the interactive characteristics of modern technologies. Each of these paths informs disability-centred practices and theories in HCI; collectively, ACCESSTECH represents a fundamental paradigm shift in the ways we encounter disabilities and technologies. More information is available on the dedicated project website. (Project lead; funded by the European Research Council (ERC) under the European Union’s Horizon Europe research and innovation programme, ERC Starting Grant 101117519.)

AI Ethics for Chatbots

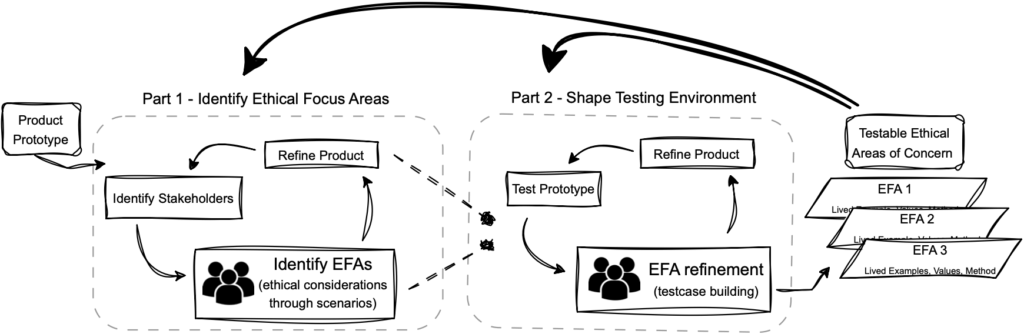

Since ChatGPT rose to prominence in November 2022, Large Language Models (LLMs) have garnered attention beyond the discipline of Computer Science and persuaded multiple stakeholders to develop individualised LLM-based Chatbots. Despite the critique this technological dispositif has received, for example for being environmentally wasteful and for neglecting the often harmful representation of marginalised people in the source material, these models have found their way into multiple services offered by both public and private stakeholders. They do exhibit remarkable capabilities, particularly when it comes to creating text about common issues or facts, as long as the prompts are fitting. When asked absurd questions, however, these models readily and confidently explain the 20 allied countries in World War II or delineate, in detail, how dinosaurs ruled Austria in 1986. The resulting confident yet nonsensical and potentially harmful responses are called ‘artificial hallucinations’. We develop a structured approach that lets developers of Chatbots involve different stakeholders throughout the development process, so that they can reflect ethically on these and other aspects and consider them in their designs. (Project lead together with Kees van Berkel; funded by the Faculty of Informatics of TU Wien.)